Why books?!

Why is it easier to start writing some random ramblings than it is to pick up a book? It's not for lack of skill, I know I read plenty of books in my younger years. Not even that long ago, reading a book seemed like a possible prospect. Now it's more of a daunting project or even worse: a chore that I just know I'll never get around to finish. So why start at all?

Why do I even let it bother me so much? Does the idea of reading something appeal more than actually reading it? Why do I feel so compelled to read stuff when I've clearly made my mind up to not do so and distract myself with other things? I wish I could just firmly decide which side of the fence my brain should land on already. Either don't read books and don't bother thinking about what I'm missing out on. Or, do read them... stupid brain.

I think part of the problem is a fondness of reading books in the past. I know they used to hold a sort of spell on me where I couldn't put them down. This would mostly apply to fiction in my case. The books on my ever growing “to read” list nowadays is usually something “deep, useful, and real” or whatever. I guess they probably are all those things, but it's like I know I'm not going to be as drawn into a world of facts the same way.

Another part of the puzzle is probably the romanticization of “reading a book” these days (just like that of traveling). I hear all the time that “our attention spans have shortened”, but has it? I do agree that information is more readily available nowadays, and that coupled with personalized data streams of varying quality means it places a fairly large filtration burden on your average modern human. But does this mean we're screwed over by our own data production and availability?... I don't think so. Learning and adapting is supposed to be one of our strengths. What I do believe in however is that it's very easy to learn an unhealthy pattern of behaviour, and subsequently stick to it. What puzzles me is that even though I know things are bad for me, why do I even do them? And it's not even like a drug that releases endorphins immediately, but it's just something as simple as “not doing the hard things today”. Why should that be my undoing? Also, it feels more like a robbery than something I had any agency over, someone came into my brain and stole the piece of it that let me appreciate a “job well done”... put that back! How am I supposed to motivate myself to do the right things when doing them no longer seem to have any effect on me huh?! (shakes fist at imaginary thief).

Now just before I forget to list them out, as if to prove my existence to myself a little harder. I like the following things:

- making music (which I'm also not doing much of lately, but it's great fun!)

- drawing (which I am actually doing on a semi-regular basis!)

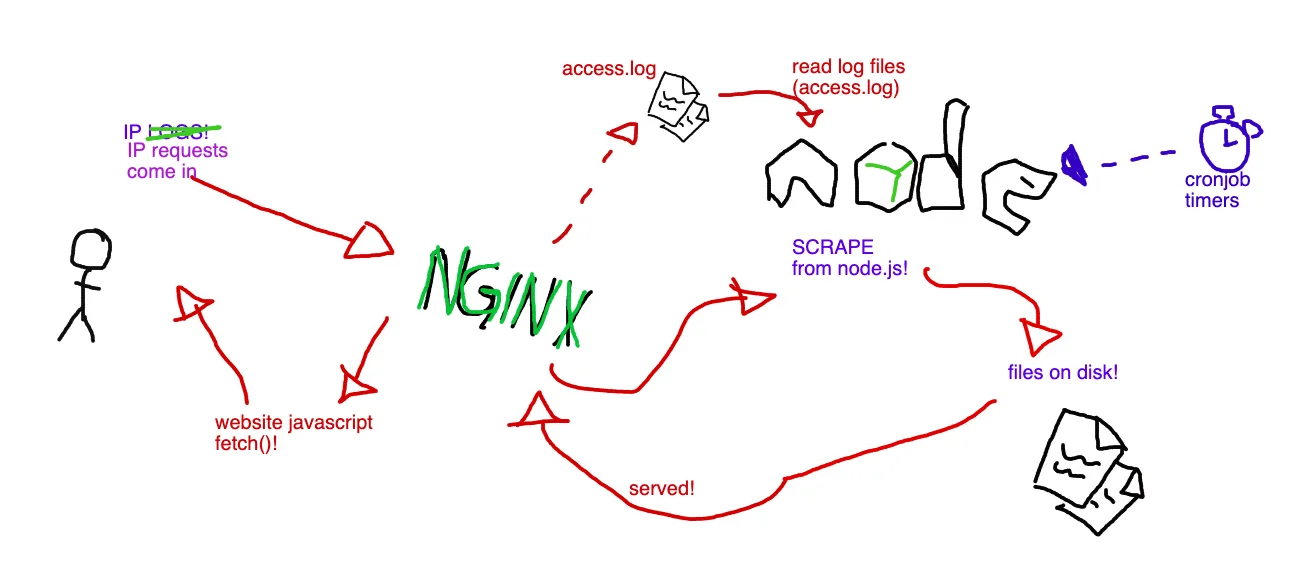

- computers & whatnot (quite rewarding, gives you a “THING” you can run and show and share! Am I a purely externally motivated person?! Even if the “THING” isn't that great or useful, it's almost always rewarding in retrospect!)

- walking (usually this means I avoided taking a bus, which means I don't get to feel nausea 🎉, which makes me feel kinda good! It already sets the pretense that the walk has already served its purpose regardless of what my brain does on the walk, or what the body gets in terms of health benefits etc... just all round good stuff!)

- working... it's a bit crazy, but sometimes work these days is... not that bad?! kinda ok'ish? I can on some occasions get into what I could only call “flow state” and forget about myself completely! I even forget to eat and to listen to the hunger pangs of my body! That part of work is amazing! I guess in short: When I am so distracted by something that I can't think about my own existence... life is great?! Hmmm. Regardless... I'll take it! give me flow state every workday of the week! (it doesn't quite work like that....yet?)

- Silly hobby things, like growing algae... if you had asked me about algae growing a couple months ago I would have looked at you strange. Now it's just really... fun?! 🎉 Taking apart a bike (two actually) in the hopes of making a functioning e-bike from the pieces of the two half-functioning ones (also with an e-bike conversion kit). Breaking an air purifier while trying to test the fan separate from the rest of its electronics (putting 12v where they shouldn't) and then getting the idea of re-creating the air purifier, but as a SMART air purifier instead! (I have all the pieces needed in theory, the filter stuff is passive, the case, and the working fan, add some “smarts” and it should all work wonderfully!)... anyways, it's all in pieces next to the pieces of the bikes on my floor at the moment. (Future me here: I did in fact finish assembling that working bike! what a rush! I threw out the leftover pieces, as well as the broken air purifier... ain't nobody got time for that.)

- Listening to music! (I know I earlier said “making music”, but that's why I waited a bit before listing this one, to make it seem like 2 completely separate things!) It's a good day almost any day where I get to lose my brain in music... usually it gets me thinking (usually if the music / song has some semi-deep lyrics) and it's amazing to just ponder about stuff in and outside the lyrics. Sometimes I guess it makes me fantasize about making my own song / lyrics too! How neat wouldn't that be?!

I've got this collection...

I've managed to scrape together a banger collection of books that my corpse should absolutely read through. The books are... how do I put it... classics mixed with highly recommended books most readers consider “worth it” (like sapiens by Harari). I made the list thinking they would most definitely make me a better person. But then there's just the act of reading all those words... I even did start on some of these books too! and I even appreciate the pieces that I did manage to read greatly! read more!... read more? ah... you're done now? ok!

What!?

Wacky words by

Tegaki